Concurrency control is a fundamental aspect of database management that ensures multiple operations can be executed simultaneously without interfering with each other. In today’s world of high-performance applications and real-time data processing, efficient concurrency control is crucial for maintaining responsiveness and scalability.

By allowing multiple operations to occur simultaneously, WiredTiger enhances the ability to handle high workloads efficiently. This chapter explores two key features that enable effective concurrency in MongoDB: document-level concurrency and multi-version concurrency control (MVCC).

Storage engines have direct control over concurrency.

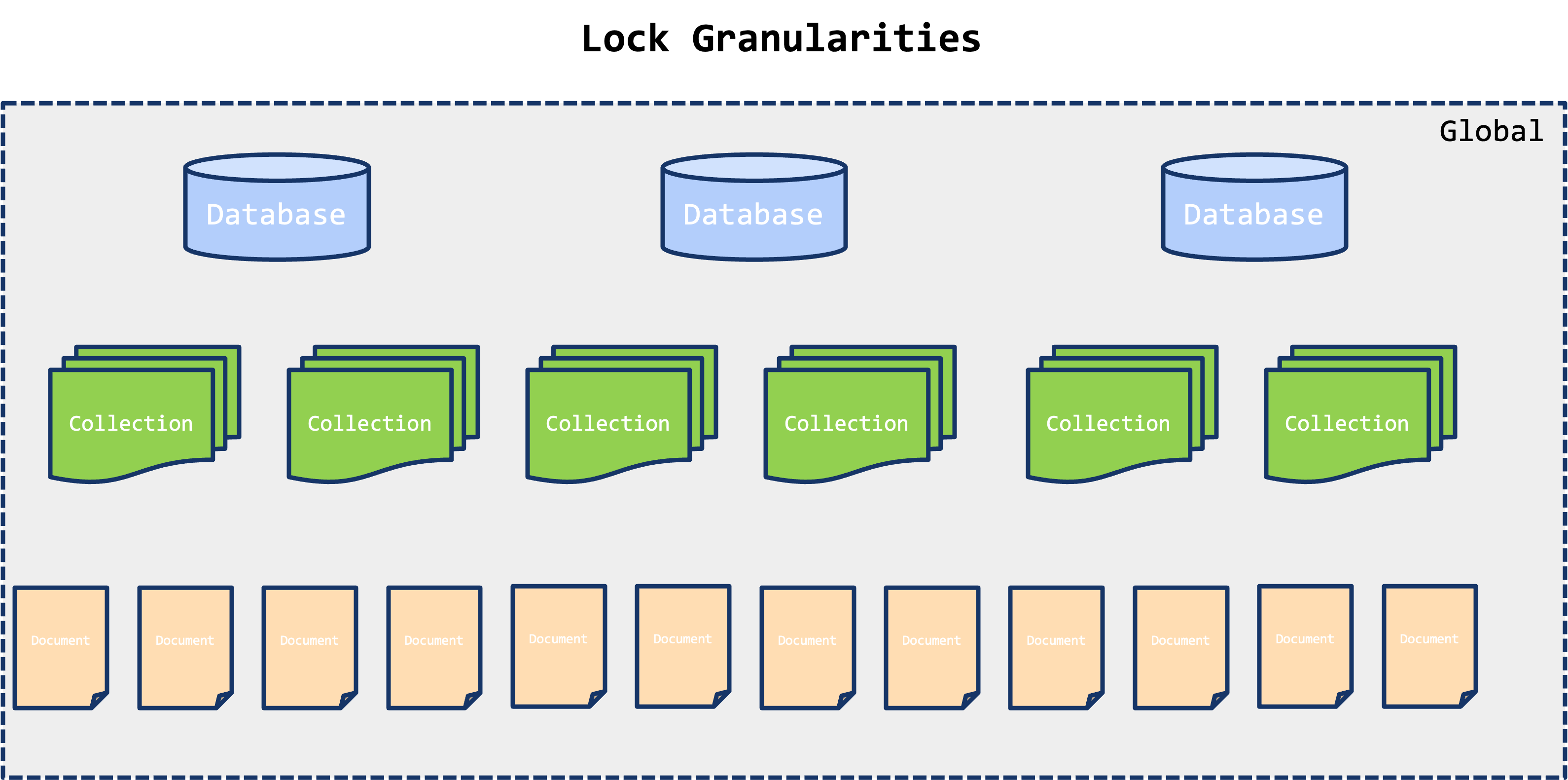

2.1.1. Lock Granularities

WiredTiger introduces a significant improvement in concurrency control over the previous MMAPv1 storage engine by supporting document-level locking. This enhancement allows for more granular control of data access, enabling MongoDB to optimize performance in multi-user environments.

In MongoDB, the concept of lock granularity refers to the size or scope of a lock applied during database operations. The database architecture supports various levels of locking granularity, ranging from large (entire databases) to small (individual documents). This flexibility in lock granularity is essential for optimizing performance and concurrency in a multi-user environment.

Database Level:

- At the coarsest level of granularity, a lock can be applied to an entire database. This type of lock restricts access to all collections and documents within that database. Such a broad lock is rarely used in MongoDB because it severely limits concurrent access and can lead to significant bottlenecks.

Collection Level:

- A step down in granularity is the collection-level lock, which restricts access to all documents within a specific collection. While this allows operations to proceed concurrently on different collections within the same database, it still limits concurrency within the collection itself.

Document Level:

- Document-level locking is the most granular approach and is a key feature of the WiredTiger storage engine used by MongoDB. This level of locking allows transactions to lock individual documents, enabling multiple operations to be performed concurrently on different documents within the same collection. This fine-grained locking minimizes contention and maximizes throughput by allowing other transactions to access and modify different documents simultaneously.

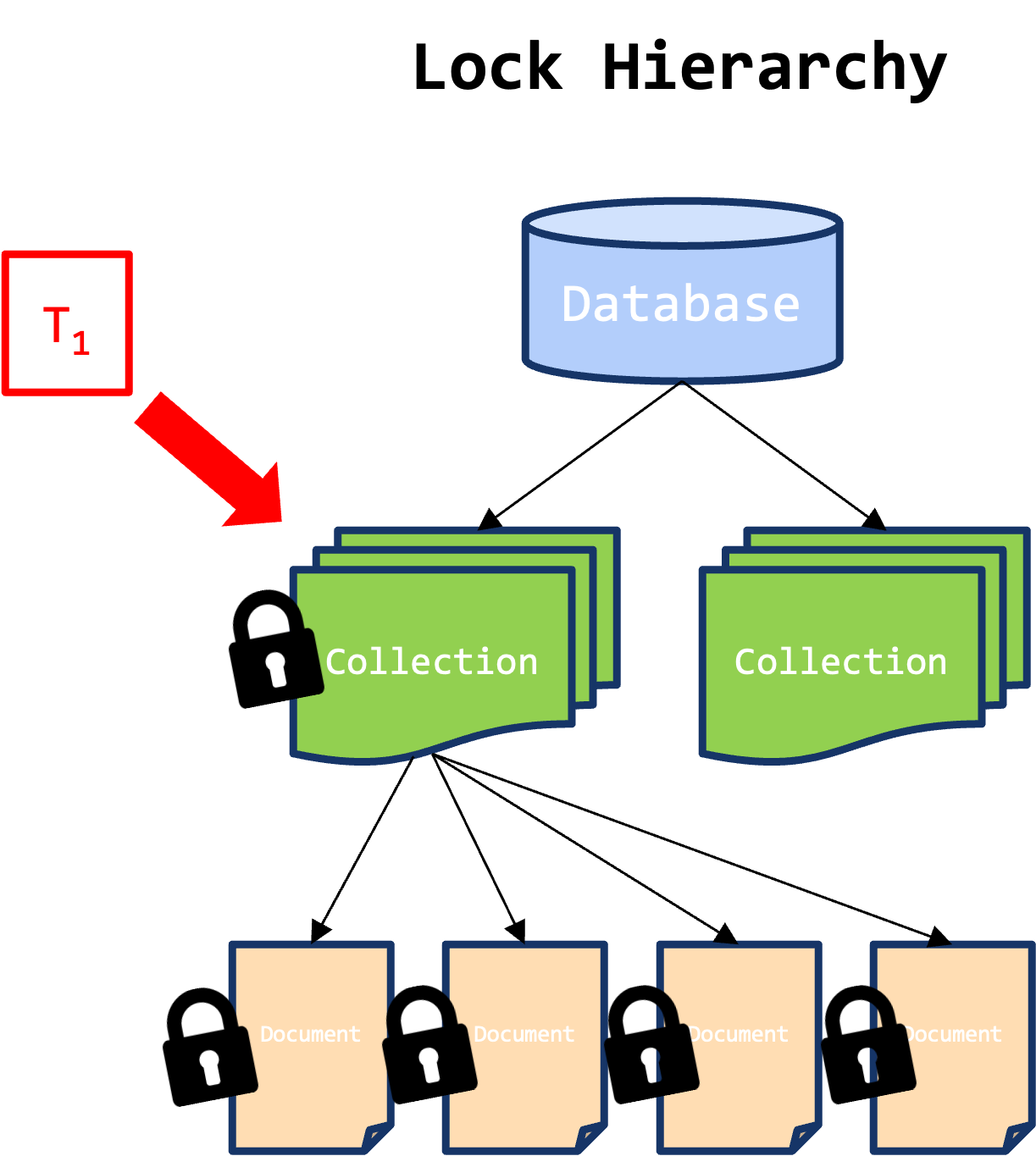

The concept of lock hierarchy involves the sequence or levels at which locks are applied to manage concurrent operations efficiently. This hierarchy ensures that locks are only applied where necessary, reducing the time and scope of locks to improve performance.

2.1.2. Lock Hierarchy

- Consider a transaction T₁ that needs to perform operations on specific documents within a collection. The transaction begins by acquiring a lock at the collection level, which gives it access to the collection containing the desired documents.

- Within the collection, the transaction acquires locks on individual documents that it needs to read or modify. These document-level locks ensure that the transaction can proceed without interference from other transactions attempting to modify the same documents.

- This approach allows other transactions to concurrently access and modify different documents, enhancing overall system performance and scalability.

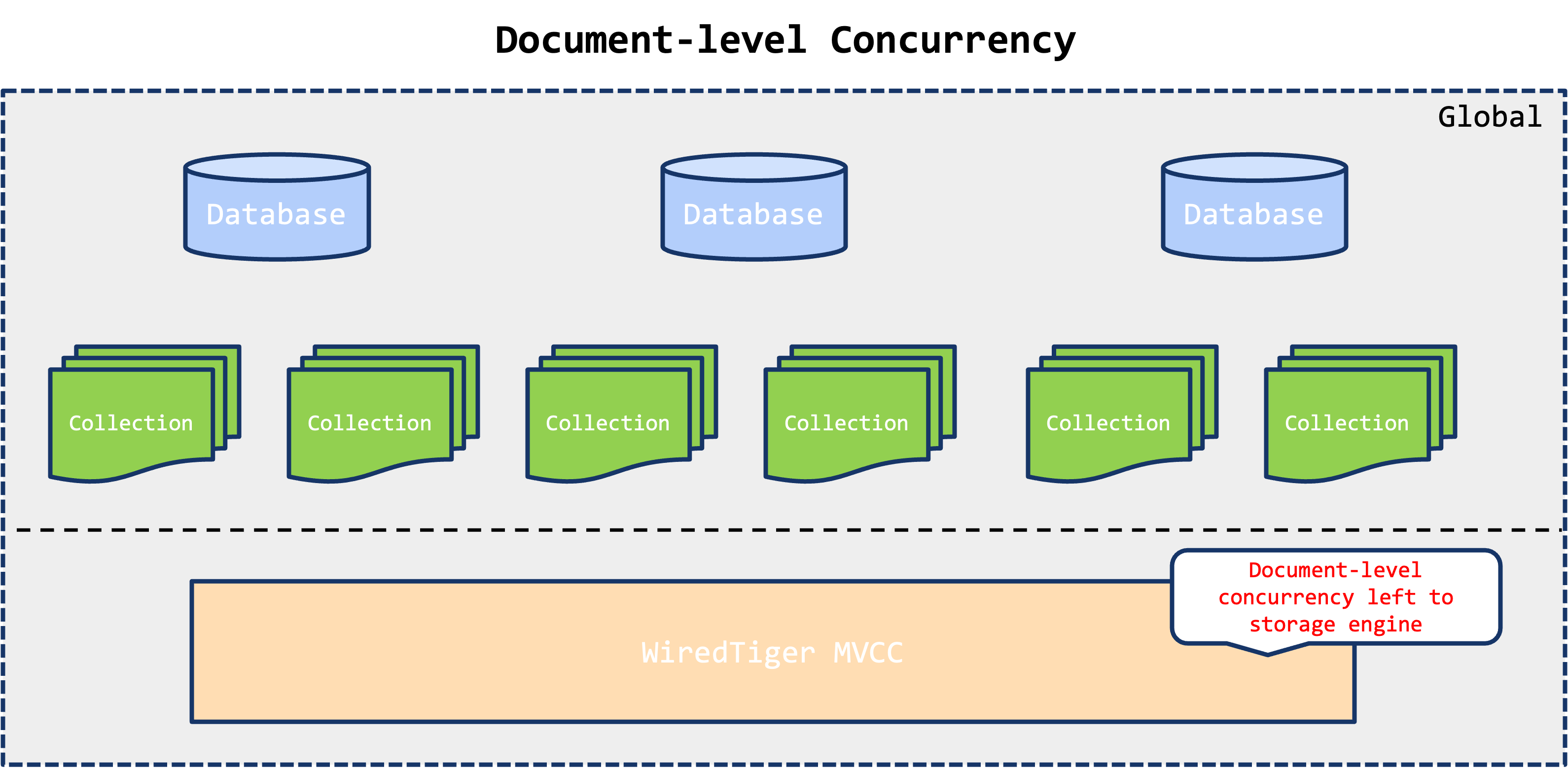

2.1.3. Document-Level Concurrency

WiredTiger, the default storage engine in MongoDB, uses sophisticated concurrency control mechanisms to improve performance and scalability, particularly through document-level concurrency. Here’s how it operates:

- Mutexes and Read-Write (RW) Locks:

- WiredTiger employs mutexes and RW locks to manage concurrent access to documents. These locks provide the necessary control to ensure safe read and write operations in a multi-threaded environment.

- Mutexes are used to ensure exclusive access to critical sections of the code or resources, preventing multiple threads from entering the section simultaneously.

- Read-Write (RW) Locks allow multiple threads to read a document concurrently (shared access) while ensuring that only one thread can write to a document at a time (exclusive access).

- MVCC:

- WiredTiger uses MVCC to manage concurrent access by maintaining multiple versions of a document.

- This allows readers to access the last committed version of a document, ensuring consistent data views without blocking ongoing write operations.
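The shared/exclusive behaviour of an RW lock described above can be sketched in a few lines. This is a minimal illustration built on Python's `threading.Condition`, not WiredTiger's implementation; the class name `RWLock` and its method names are hypothetical.

```python
import threading


class RWLock:
    """Illustrative reader-writer lock: many readers or one writer."""

    def __init__(self):
        self._cond = threading.Condition()
        self._readers = 0      # number of threads holding the lock in shared mode
        self._writer = False   # True while one thread holds it in exclusive mode

    def acquire_read(self):
        with self._cond:
            # Readers wait only while a writer holds the lock.
            while self._writer:
                self._cond.wait()
            self._readers += 1

    def release_read(self):
        with self._cond:
            self._readers -= 1
            if self._readers == 0:
                self._cond.notify_all()

    def acquire_write(self):
        with self._cond:
            # A writer waits for all readers and any other writer to finish.
            while self._writer or self._readers:
                self._cond.wait()
            self._writer = True

    def release_write(self):
        with self._cond:
            self._writer = False
            self._cond.notify_all()
```

Two readers can hold the lock at the same time, while a writer has to wait until the reader count drops to zero. This simple version does not guard against writer starvation.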

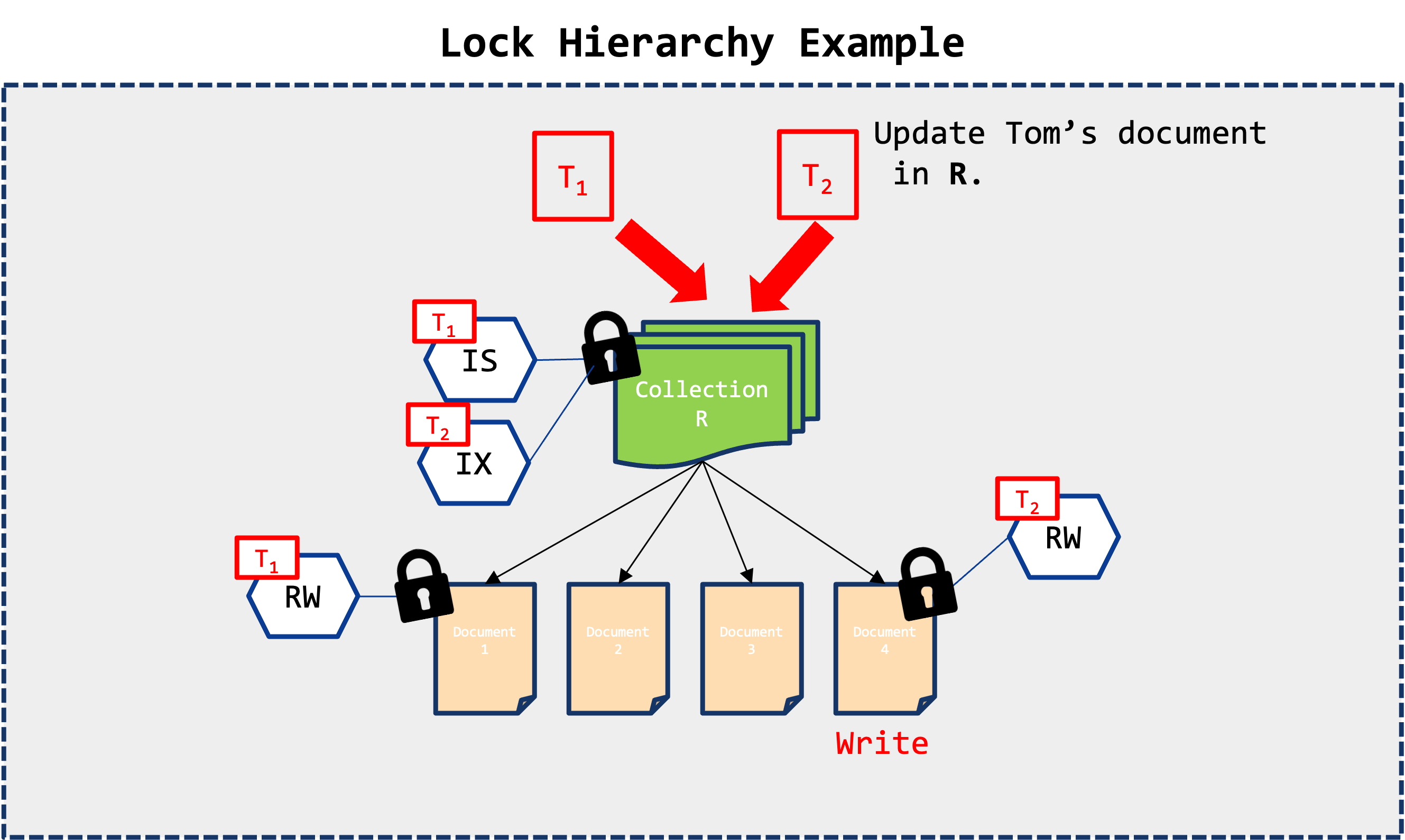

- Transaction T₁ reads a document in Collection R. It acquires an IS lock on the collection and an RW lock on the document. This allows T₁ to read the document while permitting other transactions to concurrently read from the same collection or document.

- While T₁ continues its read, Transaction T₂ updates a different document in the same collection. T₂ acquires an IX lock on the collection and an RW lock on the document it updates. This enables both transactions to proceed without blocking each other, maximizing throughput.
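The reason T₁ and T₂ do not block each other at the collection level follows from the standard multi-granularity lock compatibility matrix. The sketch below encodes that matrix for the modes IS, IX, S and X; the table and the helper `can_grant` are illustrative names, not part of MongoDB or WiredTiger.

```python
# Standard multi-granularity compatibility: for each held mode,
# the set of modes another transaction may acquire at the same time.
COMPATIBLE = {
    "IS": {"IS", "IX", "S"},
    "IX": {"IS", "IX"},
    "S": {"IS", "S"},
    "X": set(),
}


def can_grant(requested, held_modes):
    """Return True if `requested` is compatible with every mode already held."""
    return all(requested in COMPATIBLE[held] for held in held_modes)


# T1 holds IS on the collection; T2 asks for IX on the same collection.
print(can_grant("IX", ["IS"]))  # True: intent locks do not conflict
# A collection-wide shared lock would conflict with T2's IX.
print(can_grant("S", ["IX"]))   # False
```

Because IS and IX are compatible, any real conflict is pushed down to the document level, where it only arises if both transactions touch the same document.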

2.1.2. MVCC

Writers do not block readers. Readers do not block writers.

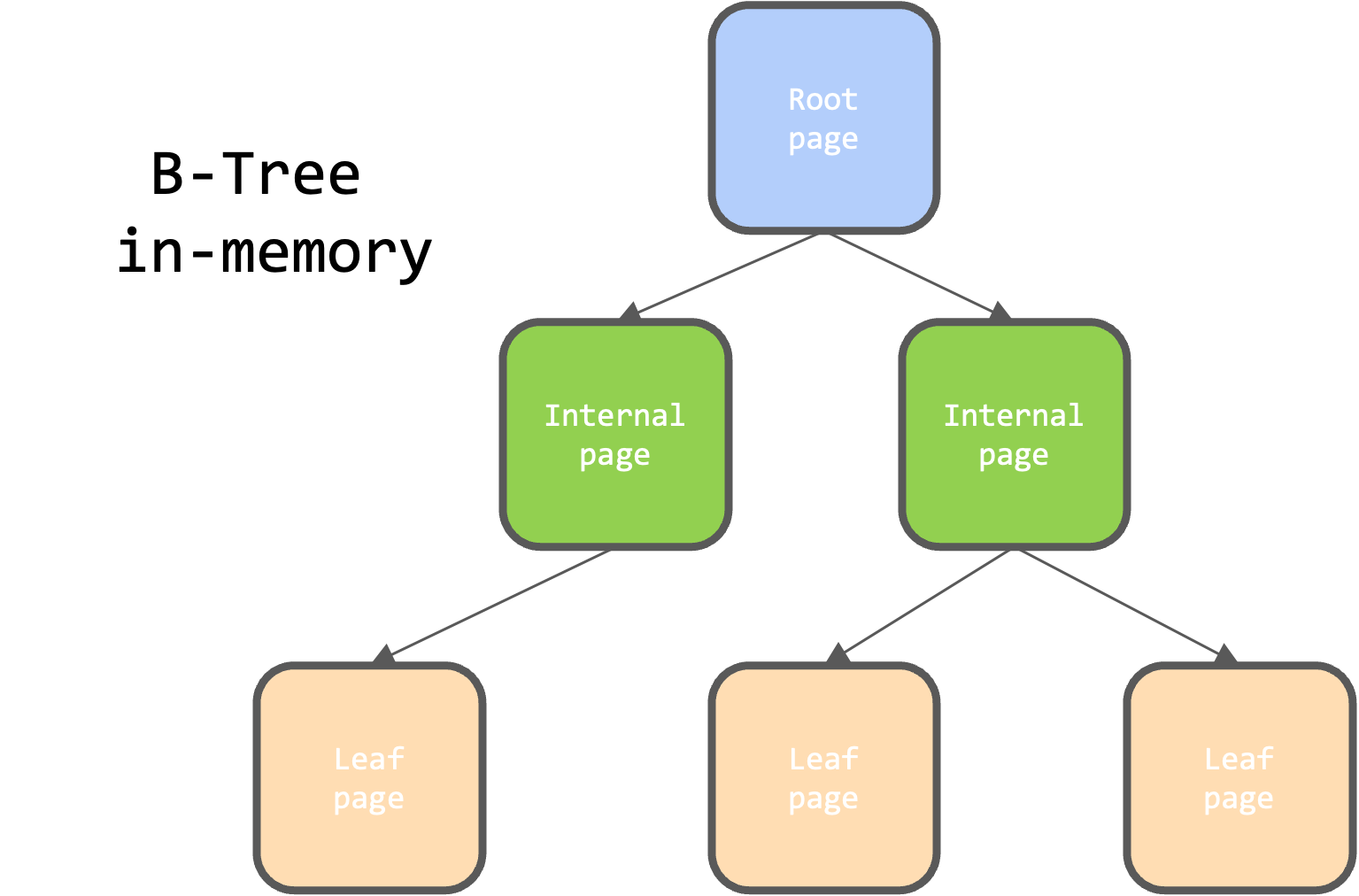

WiredTiger, a high-performance storage engine used by MongoDB, employs an in-memory B-tree layout to organize and manage data efficiently. The B-tree structure ensures quick and efficient access to data, supporting a variety of database operations such as searches, inserts, updates, and deletions.

- Root Pages

- Contains references to internal pages, facilitating the initial navigation through the tree.

- Internal Pages

- Each internal page points to either other internal pages or leaf pages.

- They help in navigating the tree structure by narrowing down the search path to the relevant leaf pages.

- Leaf Pages

- Store the actual data records (key-value pairs).
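The root-to-leaf navigation described above can be sketched as follows. This is a simplified model under stated assumptions: the class names `InternalPage` and `LeafPage`, the `separators` layout, and the sample keys are all hypothetical and do not mirror WiredTiger's actual page structures.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass
class LeafPage:
    records: dict  # key -> value pairs stored on this leaf


@dataclass
class InternalPage:
    # Sorted separator keys; children[i] covers keys below separators[i],
    # and the last child covers everything from the last separator upward.
    separators: list
    children: list  # len(children) == len(separators) + 1


def search(page, key):
    """Walk from the root down to a leaf and return the value, or None."""
    while isinstance(page, InternalPage):
        page = page.children[bisect_right(page.separators, key)]
    return page.records.get(key)


root = InternalPage(
    separators=["m"],
    children=[
        LeafPage({"apple": 1, "kiwi": 2}),
        LeafPage({"mango": 3, "pear": 4}),
    ],
)
print(search(root, "kiwi"))   # 2
print(search(root, "mango"))  # 3
print(search(root, "zebra"))  # None
```

Each step through an internal page narrows the search to one child, so a lookup touches one page per tree level.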

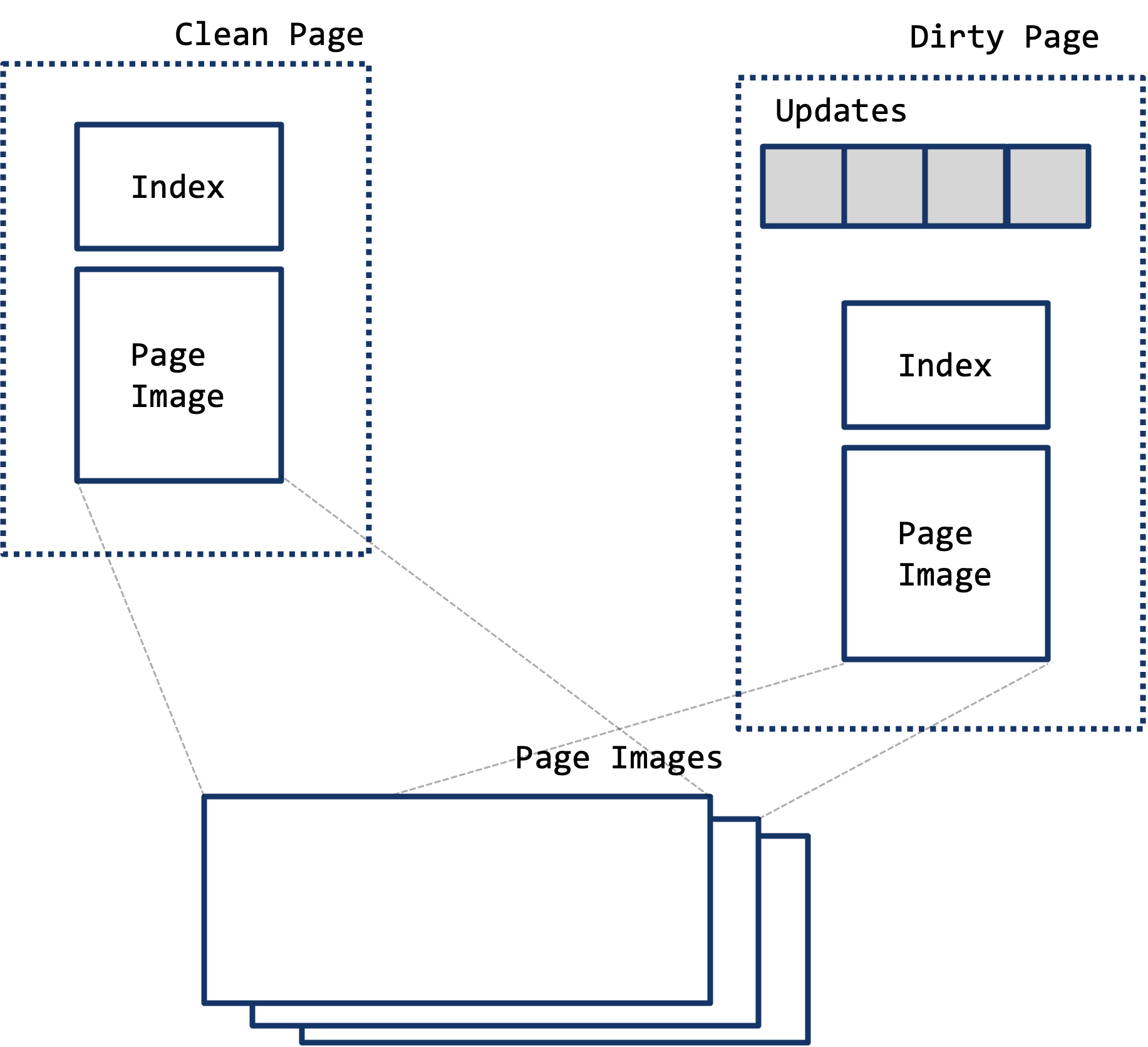

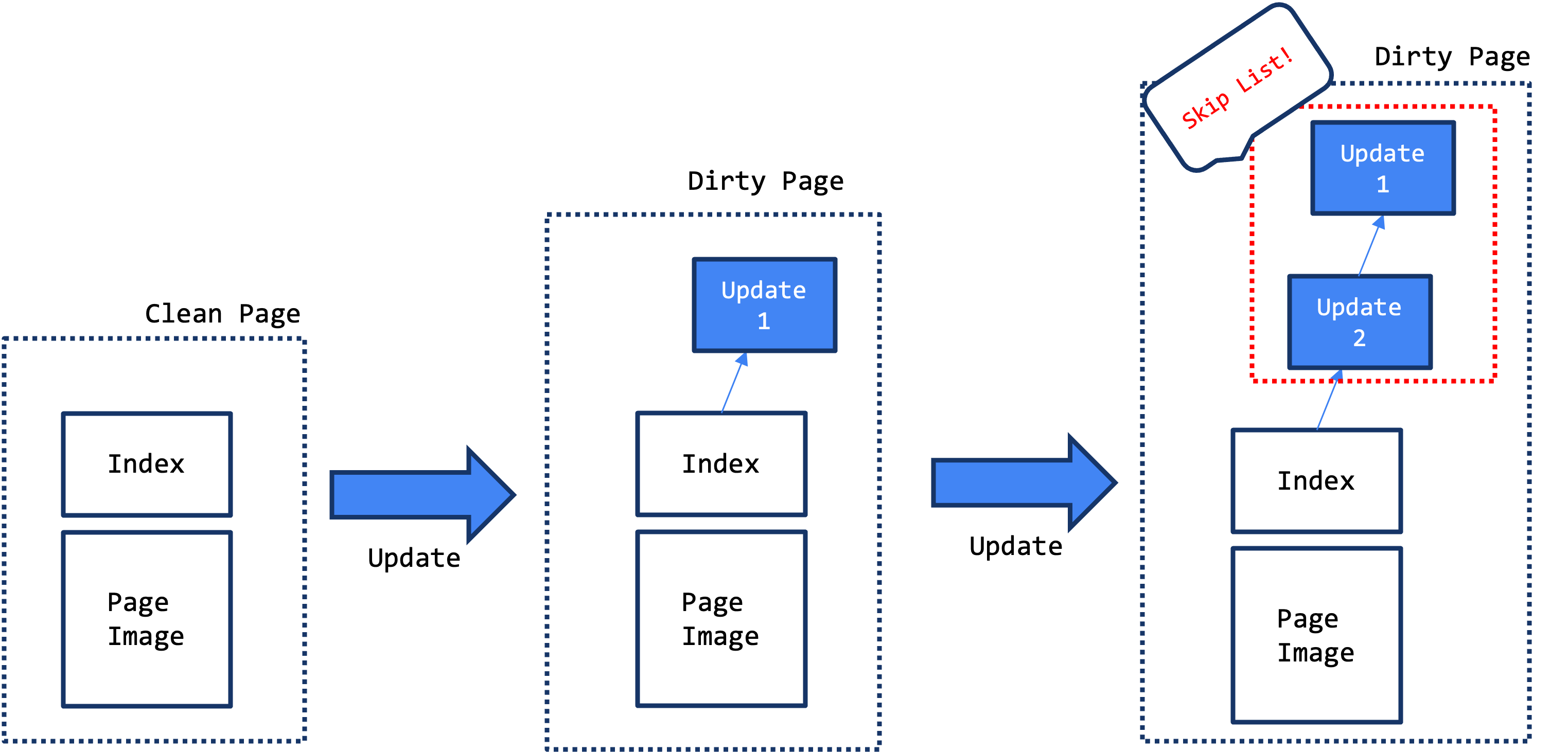

When a page is loaded into the cache, WiredTiger constructs an internal structure tailored to the page type. For a row-store leaf page, this includes an array of WT_ROW objects, each representing a key-value pair. The page also maintains an update history through update buffers. These structures ensure that both current and historical data versions are accessible within the same page.

Each record update creates a WT_UPDATE structure, which is linked to form an update buffer. This buffer allows WiredTiger to manage multiple versions of a record concurrently, supporting MVCC by enabling transactions to access the most appropriate version of the data based on their needs.

MVCC enables different transactions to access different versions of the same data without locking conflicts, thereby improving concurrency. As updates occur, new versions are added to the update chain, while older versions remain accessible for long-running transactions, ensuring that readers do not block writers and vice versa.
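A minimal sketch of an update chain makes the versioning concrete. It assumes each version carries a single commit timestamp and that a reader sees the newest version committed at or before its read timestamp; the `Update` and `Record` classes are hypothetical stand-ins, not the real `WT_UPDATE` layout.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Update:
    value: str
    commit_ts: int
    next: Optional["Update"] = None  # link to the next older version


class Record:
    def __init__(self):
        self.head = None  # newest version sits at the head of the chain

    def write(self, value, commit_ts):
        # A new version is prepended; older versions stay reachable.
        self.head = Update(value, commit_ts, self.head)

    def read(self, read_ts):
        # Walk from newest to oldest until a visible version is found.
        update = self.head
        while update is not None:
            if update.commit_ts <= read_ts:
                return update.value
            update = update.next
        return None


rec = Record()
rec.write("v1", commit_ts=10)
rec.write("v2", commit_ts=20)
print(rec.read(15))  # v1: a reader at timestamp 15 still sees the old version
print(rec.read(25))  # v2
```

The writer of `v2` never has to wait for the reader at timestamp 15, and that reader never sees a half-applied change, which is the property the text summarizes as readers and writers not blocking each other.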

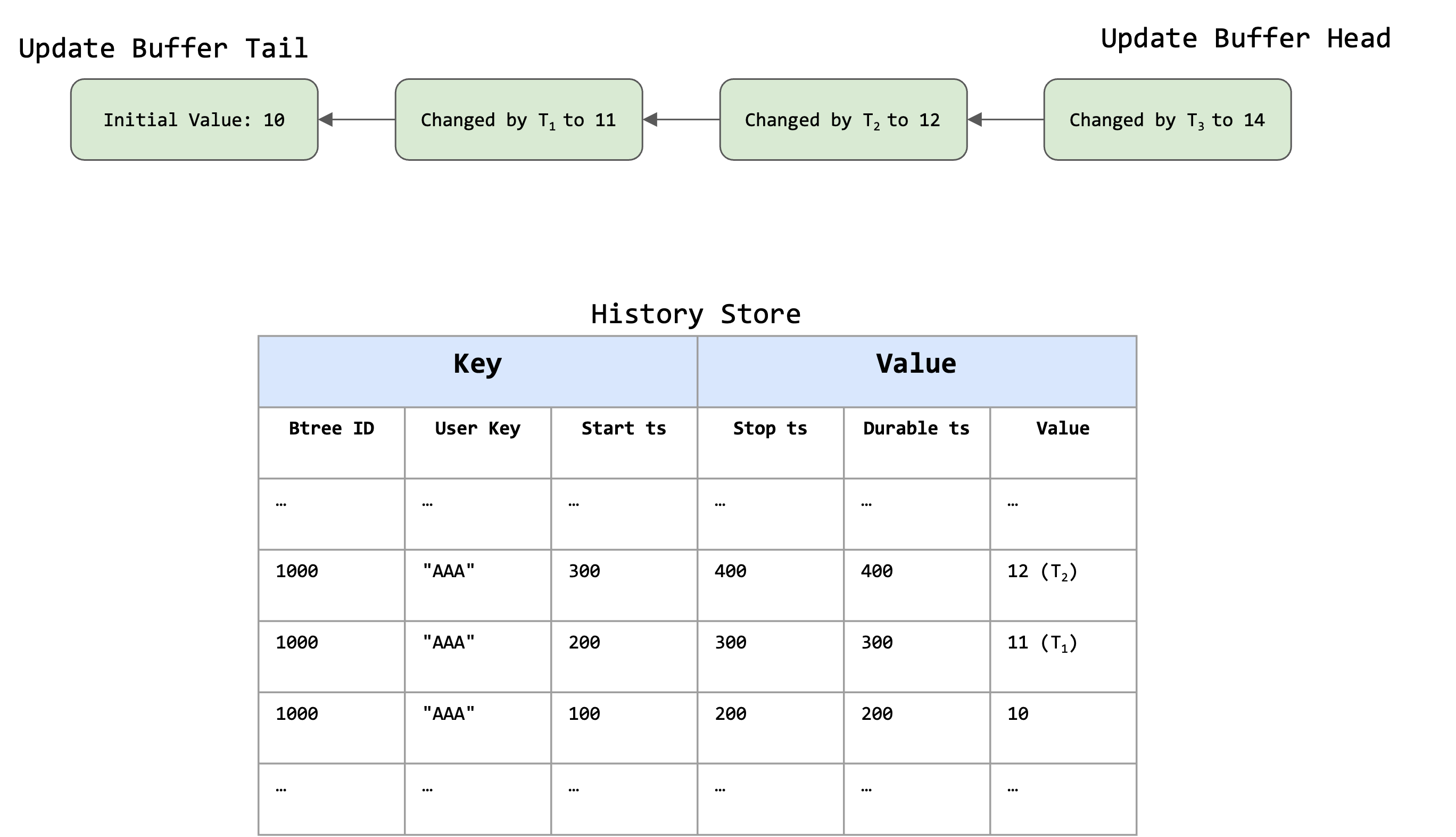

2.1.3. History Store

To efficiently manage the storage of multiple versions of data on disk, WiredTiger uses a specialized History Store. This store serves as a dedicated on-disk location for older versions of data that are no longer needed in memory but are still required to support MVCC for long-running transactions or read operations.

Components

Btree ID:

- Indicates the identifier of the B-tree to which this record belongs.

User Key:

- The key of the record in question, which is "AAA" in this case.

Start ts:

- The timestamp when this version became valid.

Stop ts:

- The timestamp when this version became obsolete (i.e., replaced by a newer version).

Durable ts:

- The timestamp at which this version was confirmed to be durable on disk.

Value:

- The actual value stored for this version.
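The fields listed above can be modelled as a simple record type. The sketch below is an illustration only: the `HistoryRecord` class, the `visible_version` helper, and all sample values other than the key "AAA" are made up, and treating the validity window as the half-open interval from start ts up to (but not including) stop ts is an assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HistoryRecord:
    btree_id: int
    user_key: str
    start_ts: int    # version became valid
    stop_ts: int     # version was replaced by a newer one
    durable_ts: int  # version was confirmed durable on disk
    value: str


def visible_version(records, btree_id, user_key, read_ts):
    """Return the historical value valid at read_ts, or None if there is none."""
    for rec in records:
        same_key = (rec.btree_id, rec.user_key) == (btree_id, user_key)
        if same_key and rec.start_ts <= read_ts < rec.stop_ts:
            return rec.value
    return None


# Hypothetical history for key "AAA" in B-tree 1.
history = [
    HistoryRecord(1, "AAA", start_ts=10, stop_ts=20, durable_ts=10, value="v1"),
    HistoryRecord(1, "AAA", start_ts=20, stop_ts=30, durable_ts=20, value="v2"),
]
print(visible_version(history, 1, "AAA", read_ts=15))  # v1
print(visible_version(history, 1, "AAA", read_ts=20))  # v2
```

A read at a timestamp outside every window finds nothing in the history, which means the version it needs is either the current one or no longer retained.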

Purpose

- When a record is updated and its previous version is no longer needed for immediate access, WiredTiger moves that version from the in-memory structures to the History Store.

- This helps keep the memory footprint of the cache manageable while ensuring that historical versions of data remain accessible if needed.

Managing Space

- The History Store allows WiredTiger to offload old versions from the main data files, freeing up space in the cache for newer data.

- This improves overall efficiency by balancing the need to keep recent data in memory with the ability to retrieve historical data when necessary.

Reconciliation and Eviction

- During the reconciliation process, if a page contains old versions of data that are no longer necessary for active transactions, those versions are moved to the History Store.

- This also occurs during eviction, where pages containing old data are evicted from the cache, and their historical versions are written to the History Store before being removed from memory.
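The hand-off from the in-memory update chain to the History Store can be sketched as below. This is a deliberately simplified model: it moves every older version on eviction and ignores which versions active transactions still need. The function name `evict`, the dictionary layout, and the sample timestamps are all hypothetical.

```python
def evict(btree_id, key, chain, history_store):
    """Simulate evicting one record.

    chain: list of (commit_ts, value) tuples, newest first.
    Older versions are appended to history_store; the newest value,
    which would be written to the data file, is returned.
    """
    newest_ts, newest_value = chain[0]
    stop_ts = newest_ts  # each older version ends where its successor begins
    for commit_ts, value in chain[1:]:
        history_store.append({
            "btree_id": btree_id,
            "user_key": key,
            "start_ts": commit_ts,
            "stop_ts": stop_ts,
            "value": value,
        })
        stop_ts = commit_ts
    return newest_value


history_store = []
on_disk = evict(1, "AAA", [(30, "v3"), (20, "v2"), (10, "v1")], history_store)
print(on_disk)             # v3
print(len(history_store))  # 2
```

After eviction the cache no longer holds the chain, yet a reader at timestamp 15 could still be served from the entry whose window runs from 10 to 20.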

By integrating the History Store with the MVCC and eviction processes, WiredTiger ensures that MongoDB can efficiently manage both current and historical data, enabling high concurrency and performance.